In a box somewhere, I have a book from early in my professional career: Computer Assisted Reporting.

That’s how old I am. The idea of using a computer to help a reporter gather the news was new enough that it warranted a book. If a cub reporter today saw that book she might quip, “What’s next, a book on Air Assisted Breathing?”

I wonder if a few years from now people will look at books on AI the same way. While AI is new in many ways, it is also the natural extension of computers, and I think it will soon be integrated into everything the way computers are now.

That is why the board of JournalList grappled for a long time with AI questions, and with whether there was a place for the technology in the trust.txt ecosystem. But after much deliberation, we have decided that there is a problem we can solve, one that is both significant and, until now, without a ready-made solution.

For AI to work, it needs to be trained. Large Language Models (LLMs) are trained on huge sets of data, mostly words. What has become clear recently is that the systems that do that training have been using text that they didn’t have permission to use. At the same time, they were not using text that was explicitly prohibited from being used to train an LLM.

That is the gray area. The law and the rules and the etiquette and all of the ways that automated systems have interacted in the past are fuzzy. They will be worked out in the courts and in standards bodies over time. But there is one small part that can be solved right now, and so the JournalList board has voted unanimously to do just that.

After a review process, we will be releasing a new version of our Specification document with a new variable. What that means is that any publisher of any website can make it clear whether they are fine with LLMs being trained on the content from that site, or whether they would prefer not to be included.

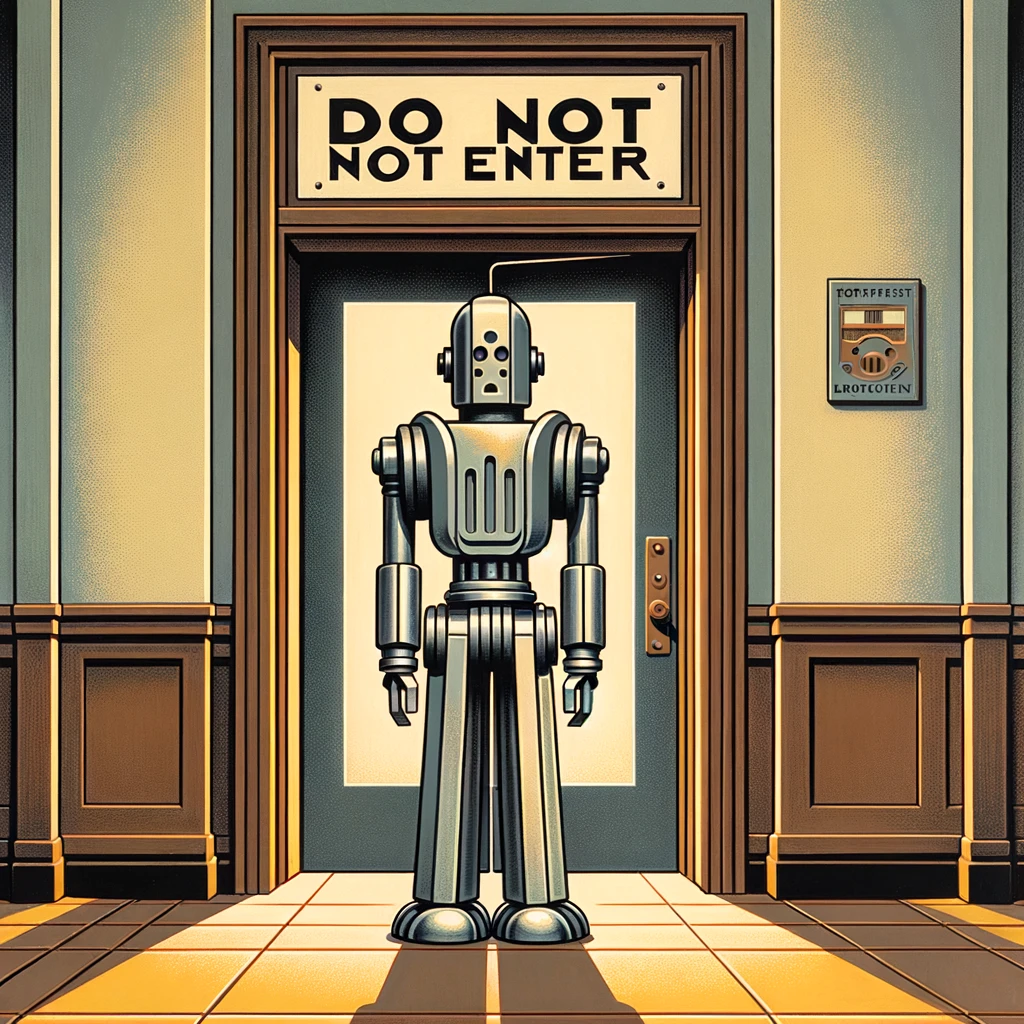

Now, as with everything on the open web, this is not an iron-clad door that will keep everyone out. But it is the first instance that gives web publishers a voice to express their clear intent to the AI robots in a machine-readable way.

And from now on, if the owner of an LLM uses data from a site that has specifically said it does not want its content used, well, we think there will be at least the opportunity for some sort of consequences.

For those of you with trust files, the variable looks like this:

datatrainingallowed=yes

or

datatrainingallowed=no

Just place that in your trust file anywhere. If you want, you can add a comment above it such as: # AI disclosure

Will this work? Certainly a system that is sophisticated enough to ingest all the content from a site could make a quick stop to look at a site’s trust.txt file to see if it has posted the equivalent of a No Trespassing sign. If it keeps on hiking, well, it may get away with it or it may not, but the operators can’t claim that they didn’t see the sign.
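For the technically curious, here is a rough sketch in Python of what that quick stop might look like once a crawler has the text of a trust.txt file in hand. This is an illustration only, not a reference implementation: the function name is made up, and the choices to skip lines starting with #, ignore capitalization and surrounding spaces, and stop at the first match are assumptions. The published spec is the authority on how the file should be read.

```python
from typing import Optional


def data_training_allowed(trust_txt: str) -> Optional[bool]:
    """Return True or False if the file states a preference, None if it is silent.

    Hypothetical helper for illustration; parsing details are assumptions.
    """
    for raw_line in trust_txt.splitlines():
        line = raw_line.strip()
        # Assumption: blank lines and lines starting with '#' carry no variables.
        if not line or line.startswith("#"):
            continue
        if "=" not in line:
            continue
        key, value = line.split("=", 1)
        if key.strip().lower() != "datatrainingallowed":
            continue
        value = value.strip().lower()
        if value == "yes":
            return True
        if value == "no":
            return False
    # No recognizable datatrainingallowed line was found.
    return None


sample = """\
# AI disclosure
datatrainingallowed=no
"""
print(data_training_allowed(sample))  # prints False
```

A result of None means the publisher has not said anything either way, which a careful crawler would want to treat differently from an explicit yes.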

The new version of the spec is now out for review and should be published soon. But there is no need to wait: you can post your own trust.txt file now.

If you have any questions about how to do that, or anything else, please contact us.

Thank you.